In the domain of data science, classification problems are everywhere. From identifying spam emails to diagnosing diseases, classification algorithms have transformed the way we make decisions. Understanding and visualizing decision boundaries offers significant insights into the behavior and performance of these algorithms.

A decision boundary gives a visual interpretation of how a classification algorithm works. The choice of algorithm strongly affects classification performance, because no model is universally best for all problems. It is therefore worth comparing different classification algorithms to see which one performs and generalizes best on the problem at hand.

In this blog post, I explore the concept of decision boundaries and compare the performance of three popular classification algorithms: K-Nearest Neighbors (KNN), Gaussian Naive Bayes, and Decision Tree. For more information about these models, refer to the respective posts here.

Understanding Decision Boundaries

A decision boundary, in the context of classification algorithms, is a hypersurface that partitions the feature space into decision regions, one per class (two regions in the binary case considered in this post). The geometry and complexity of these boundaries depend on the classification algorithm and can range from simple linear separators to convoluted hypersurfaces.
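For a binary problem, one common way to write this down is sketched below. It assumes a classifier that estimates class posteriors $P(y \mid \mathbf{x})$ and predicts the most probable class; the symbols $\hat{y}$ and $\mathcal{B}$ are notation introduced here for illustration, not taken from the original post.

```latex
\hat{y}(\mathbf{x}) = \arg\max_{c \in \{0, 1\}} P(y = c \mid \mathbf{x}),
\qquad
\mathcal{B} = \left\{ \mathbf{x} \in \mathbb{R}^d : P(y = 0 \mid \mathbf{x}) = P(y = 1 \mid \mathbf{x}) \right\}
```

Points on either side of $\mathcal{B}$ receive different labels; models that do not output probabilities still induce a boundary wherever the predicted label changes.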

By visualizing these decision boundaries, one can grasp how different algorithms partition the feature space and understand their classification logic. A straight boundary parallel to an axis suggests that the prediction depends on a threshold on a single feature, an oblique straight boundary indicates a linear combination of features, and a curved or jagged boundary indicates a nonlinear relationship between them. Understanding the nature of these boundaries also helps in assessing the model's robustness, its susceptibility to noise, and its potential for overfitting or underfitting.
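The link between boundary shape and overfitting can be checked numerically. The sketch below is illustrative only: the dataset parameters and the two values of `n_neighbors` are assumptions chosen for the example, not settings from this post. It compares training accuracy with 10-fold cross-validated accuracy for a very flexible and a smoother KNN model.

```python
from sklearn.datasets import make_moons
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Noisy two-class data (illustrative parameters, not the post's datasets)
X, y = make_moons(n_samples=300, noise=0.3, random_state=0)

results = {}
for k in (1, 15):
    knn = KNeighborsClassifier(n_neighbors=k)
    # Accuracy estimated on held-out folds
    cv_acc = cross_val_score(knn, X, y, cv=10, scoring="accuracy").mean()
    # Accuracy on the same data the model was fitted on
    train_acc = knn.fit(X, y).score(X, y)
    results[k] = (train_acc, cv_acc)
    print(f"k={k:2d}  train accuracy={train_acc:.3f}  cross-validated accuracy={cv_acc:.3f}")
```

With `k=1` every training point is its own nearest neighbor, so the training accuracy is perfect by construction; the gap to the cross-validated score is what hints at overfitting.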

Classification Algorithms to Compare

K-Nearest Neighbors: A simple yet powerful classification method that compares an unclassified sample with its K closest samples in the training set and assigns it the most common class among those K neighbors. KNN's decision boundaries can vary greatly with the choice of K and of the distance metric, providing a flexible way to classify complex datasets. More information on this algorithm can be found here.
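The voting rule can be sketched in a few lines of NumPy. This is a minimal illustration assuming Euclidean distance and a plain majority vote; the helper `knn_predict` and the toy data are hypothetical and are not how scikit-learn implements `KNeighborsClassifier` internally.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train, y_train, x_query, k=3):
    """Predict the label of x_query by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every training sample
    distances = np.linalg.norm(X_train - x_query, axis=1)
    # Indices of the k closest training samples
    nearest = np.argsort(distances)[:k]
    # Most common label among those neighbors
    votes = Counter(y_train[nearest].tolist())
    return votes.most_common(1)[0][0]


# Tiny hypothetical training set: three points of class 0, two of class 1
X_train = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [5.0, 5.0], [5.0, 6.0]])
y_train = np.array([0, 0, 0, 1, 1])

pred_a = knn_predict(X_train, y_train, np.array([0.5, 0.5]), k=3)
pred_b = knn_predict(X_train, y_train, np.array([5.0, 5.5]), k=3)
print(pred_a, pred_b)
```

The first query sits among the class 0 points and the second next to the two class 1 points, so the votes go to class 0 and class 1 respectively.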

Gaussian Naive Bayes: This classifier applies Bayes' theorem with a strong (naive) assumption that the features are conditionally independent given the class. It further assumes that, within each class, every feature follows a simple Gaussian distribution. Despite its simplicity, Gaussian Naive Bayes can be surprisingly effective and scales well to datasets with many features. Its decision boundaries are generally quadratic, and they become linear when the classes share the same per-feature variances. Refer to this link for more details.
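Why the boundary is quadratic can be seen from a short derivation. The notation is introduced here for illustration: $P(c)$ is the prior of class $c$, and $\mu_{cj}$, $\sigma_{cj}^2$ are the mean and variance of feature $j$ within class $c$. Under the independence and Gaussian assumptions, the log-posterior of class $c$ is, up to a term that does not depend on $c$:

```latex
\log P(y = c \mid \mathbf{x})
  = \log P(c)
  - \sum_{j=1}^{d} \left[ \tfrac{1}{2} \log\!\left(2\pi\sigma_{cj}^{2}\right)
  + \frac{(x_j - \mu_{cj})^{2}}{2\sigma_{cj}^{2}} \right] + \text{const}

% Boundary between classes 0 and 1: set the two log-posteriors equal
\sum_{j=1}^{d} \left[ \frac{(x_j - \mu_{0j})^{2}}{2\sigma_{0j}^{2}}
  - \frac{(x_j - \mu_{1j})^{2}}{2\sigma_{1j}^{2}} \right]
  = \log\frac{P(0)}{P(1)}
  - \frac{1}{2} \sum_{j=1}^{d} \log\frac{\sigma_{0j}^{2}}{\sigma_{1j}^{2}}
```

The left-hand side is a quadratic polynomial in the features. The $x_j^2$ terms cancel only when $\sigma_{0j} = \sigma_{1j}$ for every feature, in which case the boundary reduces to a hyperplane.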

Decision Tree: A decision tree is a flowchart-like structure where each internal node denotes a test on an attribute, each branch represents the outcome of a test, and each leaf node holds a class label. The decision boundaries are typically axis-aligned, partitioning the feature space into rectangles (hyperrectangles in higher dimensions). In principle, decision trees can handle both numerical and categorical data, making them a versatile choice for many classification problems, although scikit-learn's implementation expects categorical features to be numerically encoded. Learn more about decision trees here.
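The axis-aligned nature of the splits is easy to inspect in scikit-learn. The sketch below fits a deliberately shallow tree and prints its rules; the dataset, `max_depth=2`, and the feature names `x0` and `x1` are assumptions made for this illustration.

```python
from sklearn.datasets import make_moons
from sklearn.tree import DecisionTreeClassifier, export_text

# Small two-feature dataset (illustrative parameters)
X, y = make_moons(n_samples=200, noise=0.2, random_state=0)
tree = DecisionTreeClassifier(max_depth=2, random_state=0).fit(X, y)

# Human-readable rules: every test compares one feature with one threshold
rules = export_text(tree, feature_names=["x0", "x1"])
print(rules)

# tree_.feature holds the feature index tested at each node (negative for leaves)
split_features = [int(f) for f in tree.tree_.feature if f >= 0]
print("Features used in splits:", split_features)
```

Each printed rule has the form `feature <= threshold`, which corresponds to a vertical or horizontal cut in the two-dimensional plots later in this post.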

In the following section, I compare these three algorithms, examining their decision boundaries and computing their performance in terms of accuracy.

Visualizing Decision Boundaries

To visualize the decision boundary I implemented the machine learning models in Python using the scikit-learn library. I also developed functions to plot the decision boundaries and compute the accuracy scores of the models.

Importing Necessary Libraries

First, we import the Python libraries used throughout the project.

# Import necessary libraries
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from matplotlib.colors import ListedColormap
import matplotlib.patches as mpatches
from sklearn.neighbors import KNeighborsClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier
from sklearn import datasets
from sklearn.model_selection import cross_val_score

Here we imported pandas and numpy for handling and manipulating data, matplotlib for creating plots, and scikit-learn (sklearn) for the machine learning algorithms, cross-validation, and datasets.

Defining Functions

We developed two functions: DecisionBoundaries and Cal_Acc.

The function DecisionBoundaries plots the decision boundaries for different classifiers on various datasets. It creates a grid of subplots where each row represents a specific dataset and each column represents a classifier. The first column in each row presents the datasets with their true labels.

# Function to Plot Decision Boundaries
def DecisionBoundaries(model_names, models, arr_datasets, arr_labels, size):
    h = 0.02  # step size in the mesh
    # squeeze=False keeps axs two-dimensional even when a single dataset is passed
    fig, axs = plt.subplots(len(arr_datasets), len(model_names) + 1, figsize=size, facecolor='#F5F5F5', squeeze=False)
    # Defining colormaps for visualizing the decision boundaries
    points_colormap = ListedColormap(['#FF0000', '#00FF00'])
    background_colormap = ListedColormap(['#FFAAAA', '#c2f0c2'])
    # Defining patches for a custom legend (not used below; the legends are built from the scatter handles)
    class0 = mpatches.Patch(color='#FF0000', label='0')
    class1 = mpatches.Patch(color='#00FF00', label='1')
    # Iterate through each dataset
    for i, (dataset, labels) in enumerate(zip(arr_datasets, arr_labels)):
        # Setting limits for the meshgrid (10% margin around the data range)
        x_min, x_max = dataset[:, 0].min() - 0.1*abs(dataset[:, 0].min()), dataset[:, 0].max() + 0.1*abs(dataset[:, 0].max())
        y_min, y_max = dataset[:, 1].min() - 0.1*abs(dataset[:, 1].min()), dataset[:, 1].max() + 0.1*abs(dataset[:, 1].max())
        xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))
        # Displaying input data
        axs[i,0].set_facecolor('#F5F5F5')
        scatter = axs[i,0].scatter(dataset[:, 0], dataset[:, 1], c=labels, cmap=points_colormap, edgecolor='k')
        axs[i,0].legend(handles=scatter.legend_elements()[0], labels=['0', '1'], title='Classes')
        if i == 0:
            axs[i,0].set_title("Input Data")
        # Iterate through each model
        for j, (name, model) in enumerate(zip(model_names, models)):
            # Applying the model to generate decision regions
            model.fit(dataset, labels)
            decision_boundary = model.predict(np.c_[xx.ravel(), yy.ravel()])
            # Plotting the decision boundaries
            decision_boundary = decision_boundary.reshape(xx.shape)
            axs[i,j+1].set_facecolor('#F5F5F5')
            axs[i,j+1].pcolormesh(xx, yy, decision_boundary, cmap=background_colormap)
            # Plotting the data points
            scatter = axs[i,j+1].scatter(dataset[:, 0], dataset[:, 1], c=labels, cmap=points_colormap, edgecolor='k')
            axs[i,j+1].legend(handles=scatter.legend_elements()[0], labels=['0', '1'], title='Classes')
            # Adding title to each subplot
            if i == 0:
                axs[i,j+1].set_title(name)
    # Render the finished figure
    plt.show()
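The core trick in this function is to evaluate the classifier on every point of a regular grid and reshape the flat predictions back into the grid's shape. The sketch below isolates that step on a deliberately coarse grid; the threshold rule standing in for `model.predict` is a hypothetical placeholder, not one of the models from this post.

```python
import numpy as np

# Coarse grid: 2 x-values and 3 y-values (the post uses a step of 0.02)
xx, yy = np.meshgrid(np.arange(0.0, 1.0, 0.5), np.arange(0.0, 1.5, 0.5))

# Flatten both coordinate arrays and pair them up: one row per grid point
grid_points = np.c_[xx.ravel(), yy.ravel()]

# Stand-in for model.predict: label 1 where x + y >= 1, else 0
flat_labels = (grid_points.sum(axis=1) >= 1.0).astype(int)

# Back to the grid layout expected by pcolormesh
label_grid = flat_labels.reshape(xx.shape)

print(xx.shape, grid_points.shape)
print(label_grid)
```

Rows of `label_grid` follow the y-values and columns follow the x-values, which is the layout `pcolormesh(xx, yy, label_grid)` expects.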

The Cal_Acc function computes the average accuracy of each model on each dataset using 10-fold cross-validation. It stores the accuracy results in a pandas DataFrame for easier visualization and interpretation.

# Function to Calculate Average Accuracies using K-Fold Cross Validation
def Cal_Acc(model_names, models, dataset_names, arr_datasets, arr_labels):
    Accuracies = np.zeros((len(arr_datasets), len(model_names)))
    # Iterate through each dataset
    for i, (dataset, labels) in enumerate(zip(arr_datasets, arr_labels)):
        # Iterate through each model
        for j, model in enumerate(models):
            # Calculating cross-validation accuracy and storing in an array
            acc = cross_val_score(model, dataset, labels, cv=10, scoring='accuracy').mean()
            Accuracies[i, j] = round(acc, 5)
    # Generating a DataFrame from the accuracy array
    df_accuracies = pd.DataFrame(Accuracies, columns=model_names)
    df_accuracies.insert(0, "Datasets", dataset_names, True)
    return df_accuracies
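To make the `cross_val_score(..., cv=10)` call less of a black box, the sketch below reproduces it by hand. It relies on scikit-learn's documented behavior that an integer `cv` with a classifier uses `StratifiedKFold` without shuffling; the dataset and the choice of `GaussianNB` are assumptions made for this illustration.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_moons
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.naive_bayes import GaussianNB

# Illustrative data and model
X, y = make_moons(n_samples=200, noise=0.2, random_state=0)
model = GaussianNB()

fold_scores = []
for train_idx, test_idx in StratifiedKFold(n_splits=10).split(X, y):
    # Fit a fresh copy on nine folds, score accuracy on the held-out fold
    fold_model = clone(model).fit(X[train_idx], y[train_idx])
    fold_scores.append(fold_model.score(X[test_idx], y[test_idx]))

manual_mean = float(np.mean(fold_scores))
library_mean = float(cross_val_score(model, X, y, cv=10, scoring="accuracy").mean())
print(f"manual: {manual_mean:.5f}  cross_val_score: {library_mean:.5f}")
```

Each sample is used for testing exactly once, so the averaged score is less sensitive to a lucky or unlucky single train/test split.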

Dataset Generation

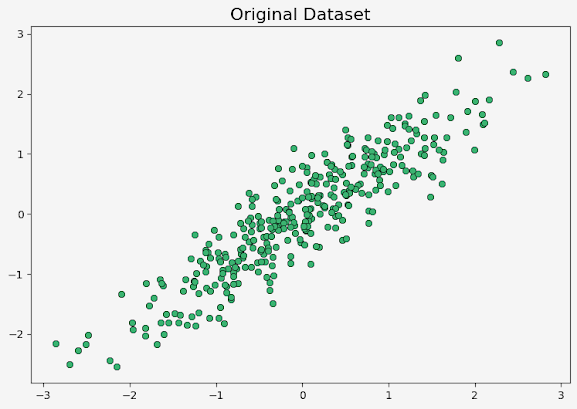

We created three synthetic datasets, each presenting a different type of distribution and classification challenge: concentric circles (make_circles), half-moon shapes (make_moons), and two blobs (make_blobs). Each dataset has a corresponding set of labels.

# Generating Datasets
Dataset1, Labels1 = datasets.make_circles(n_samples=600, noise=0.13, factor=0.5, random_state=0)
Dataset2, Labels2 = datasets.make_moons(n_samples=600, noise=0.22, random_state=0)
Dataset3, Labels3 = datasets.make_blobs(n_samples=600, centers=2, cluster_std=1.3, random_state=0)
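As a quick sanity check, the shape and class balance of each generated dataset can be inspected. The sketch below repeats the generator calls with the same parameters so that it runs on its own; the variable names are chosen for this example only.

```python
import numpy as np
from sklearn import datasets

# Same generator calls and parameters as in the post
X_circles, y_circles = datasets.make_circles(n_samples=600, noise=0.13, factor=0.5, random_state=0)
X_moons, y_moons = datasets.make_moons(n_samples=600, noise=0.22, random_state=0)
X_blobs, y_blobs = datasets.make_blobs(n_samples=600, centers=2, cluster_std=1.3, random_state=0)

for name, X, y in [("circles", X_circles, y_circles),
                   ("moons", X_moons, y_moons),
                   ("blobs", X_blobs, y_blobs)]:
    # Two features per sample and the number of samples in each class
    print(name, X.shape, np.bincount(y))
```

All three datasets have two features and two equally sized classes, so plain accuracy is a reasonable metric for comparing the models.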

Then, we grouped them together along with their labels.

# Grouping Datasets
arr_Datasets = [Dataset1, Dataset2, Dataset3]
arr_Labels = [Labels1, Labels2, Labels3]
dataset_names = ["Dataset 1", "Dataset 2", "Dataset 3"]

Defining Machine Learning Models

We defined the classification models: K-Nearest Neighbors (KNN), Gaussian Naive Bayes, and Decision Tree.

# Defining Models
KNN_model = KNeighborsClassifier(n_neighbors=5)
NaiveBayes_model = GaussianNB()
DecisionTree_model = DecisionTreeClassifier(criterion='gini', splitter='best', random_state=0)

We also grouped the models in a list.

# Grouping Models
models = [KNN_model, NaiveBayes_model, DecisionTree_model]
model_names = ["K-Nearest Neighbors", "Gaussian Naive Bayes", "Decision Tree"]

Results

We used the functions to plot the decision boundaries and calculate the accuracies of the models applied to each dataset generated in previous sections.

# Plotting Decision Boundaries
DecisionBoundaries(model_names, models, arr_Datasets, arr_Labels, size=(18, 10))

# Calculating Average Accuracies
accuracies = Cal_Acc(model_names, models, dataset_names, arr_Datasets, arr_Labels)

The graph containing the decision boundaries of each model and dataset is shown below:

The results for the accuracy scores of the models applied to each dataset were:

Conclusion

Understanding decision boundaries provides valuable insights into the behavior of classification models. It reveals how each model draws the 'line' between different classes, which can be a crucial factor in certain applications. In this blog post, we explored decision boundaries and implemented popular classification models, namely K-Nearest Neighbors (KNN), Gaussian Naive Bayes, and Decision Trees, to understand their decision-making process.

Through a comparative study, we demonstrated that each model has its strengths and weaknesses, and their performance can significantly vary depending on the complexity and distribution of the data. This underlines the importance of comprehending the underlying mechanisms of these models and applying this understanding in the model selection process.
